Introduction

In other posts I have outlined a general theory of systemic functional multimodal discourse analysis (SF-MDA). Based on Halliday’s systemic functional linguistics (SFL) and adapted by O’Toole, Kress, van Leeuwen, and others to analyze other semiotic systems such as image and sound, SF-MDA applies the three metafunctions (the ideational, comprising the experiential and logical; the interpersonal; and the textual) to the analysis of images and screen-based texts.

I have to admit that I am still digesting O’Halloran’s (2008) article. She works at an extremely fine level of detail, adapting the nuances of SFL to the analysis of imagery. Specifically, her work deals with intersemiosis: the meaning created by combining multiple semiotic modes, especially the meaning that arises when image and text are juxtaposed. This is an important field of research because screen texts almost always combine imagery with typography, and developing theoretical frameworks for understanding this interpretative space is essential to multimodal research. In this post I explore some of these frameworks and discuss O’Halloran’s suggestion that multimodal texts can be analyzed effectively with software such as Photoshop.

Analyzing Text and Images

While there has been a lot of research into using SFL to analyze an image or a text on its own, multimedia requires that we develop Cross-Functional Systems: frameworks that can interpret, for instance, the meaning created by combining image and text.

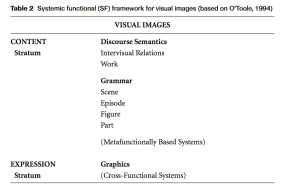

A basic tenet of SFL is that language is divided into two strata: content and expression. The content stratum consists of lexicogrammar (the combination of words, word groups, clauses, and clause complexes, along with mechanics), while the expression stratum consists of letters and phonology, the means by which language is expressed.

O’Halloran adapts the content and expression strata to imagery, with particular attention to intersemiotic complementarity, the way multiple semiotic modes combine to make meaning (see table 1).

Here the content stratum is broken into discourse semantics and grammar. Like the discourse level of language, which deals with large bodies of text, the discourse semantic level in this framework looks at the visual image as a whole. At the more detailed level of grammar, the framework examines the scenes, episodes, figures, and members of an image. The expression stratum deals with graphics and arrangement, which convey the content to the viewer. It requires a Cross-Functional framework because this is the level at which several semiotic modes (graphics, color, image, text, etc.) combine to create cohesive meaning.
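To keep the levels straight for myself, I find it helpful to write the framework down as a small lookup table. The Python sketch below is only my own bookkeeping, built from the prose description above rather than from table 1 itself; the tuple layout and the `locate` helper are assumptions of mine, not part of O’Halloran’s framework.

```python
# Each entry: (stratum, level within the stratum, unit of analysis).
# The post names no sublevels for the expression stratum, so level is None there.
VISUAL_FRAMEWORK = [
    ("content", "discourse semantics", "whole image"),
    ("content", "grammar", "scene"),
    ("content", "grammar", "episode"),
    ("content", "grammar", "figure"),
    ("content", "grammar", "member"),
    ("expression", None, "graphics"),
    ("expression", None, "arrangement"),
]


def locate(unit, framework=VISUAL_FRAMEWORK):
    """Return (stratum, level) for a unit of analysis, or None if it is not listed."""
    for stratum, level, name in framework:
        if name == unit:
            return stratum, level
    return None


print(locate("figure"))    # ('content', 'grammar')
print(locate("graphics"))  # ('expression', None)
```

A lookup like this does no analysis on its own; it simply makes explicit which stratum an observation about an image belongs to.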

A Framework for Analyzing Advertisements

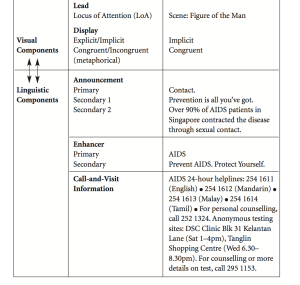

I have found table 2 useful for the analysis of advertisements. The table breaks advertisements into their visual and linguistic components. The visual component consists of the Lead, which contains the Locus of Attention (LoA), the most salient figure in the advertisement; and the Display, the way in which the figure is displayed. The table then analyzes the linguistic components of the ad. Advertisements usually have an announcement followed by primary and secondary text. An ad may also have an enhancer, text which enhances the primary announcement, as well as call-and-visit information, which gives a website, contact details, etc.
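When I annotate an ad with these categories, it helps to treat them as fields in a record. The following Python sketch is one possible way to do that, assuming only the components named in this post; the class names, field names, and the `missing_components` helper are hypothetical, and the advertisement itself is invented for illustration.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class VisualComponents:
    locus_of_attention: str   # LoA: the most salient figure in the ad
    display: str              # the way in which that figure is displayed


@dataclass
class LinguisticComponents:
    announcement: str
    primary_text: Optional[str] = None
    secondary_text: Optional[str] = None
    enhancer: Optional[str] = None         # text that enhances the announcement
    call_and_visit: Optional[str] = None   # website, contact information, etc.


def missing_components(linguistic):
    """List the linguistic components that have not been annotated (still None)."""
    return [f.name for f in fields(linguistic) if getattr(linguistic, f.name) is None]


# An invented advertisement, purely for illustration.
visual = VisualComponents(
    locus_of_attention="runner mid-stride",
    display="full-color photograph, centered",
)
linguistic = LinguisticComponents(
    announcement="Run further.",
    primary_text="Lightweight shoes for long distances.",
    call_and_visit="www.example.com",
)

print(missing_components(linguistic))  # ['secondary_text', 'enhancer']
```

Listing the empty fields is a quick way to see which optional components a given ad leaves out, which can itself be worth discussing.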

Analyzing Multimodal Texts with Digital Technology

O’Halloran suggests using digital technology such as Photoshop to analyze multimodal texts. Teachers, researchers, and students could import an advertisement into Photoshop and annotate the text as well as experiment with color saturation, light, tint, and other features. O’Halloran has since developed multimodal analysis software, but I have not yet evaluated it. Other multimodal annotation software includes ELAN.
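For readers without Photoshop, the saturation experiment can be approximated in a few lines of code. The sketch below is my own stand-in, not O’Halloran’s method and not how Photoshop works internally: it uses Python’s standard `colorsys` module to scale the saturation of individual RGB pixel values, and the function name `scale_saturation` and the sample pixels are assumptions made for illustration.

```python
import colorsys


def scale_saturation(rgb, factor):
    """Scale the saturation of one 8-bit RGB pixel.

    factor 0 removes all color (gray), factor 1 leaves the pixel unchanged.
    """
    r, g, b = (channel / 255 for channel in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)
    s = max(0.0, min(1.0, s * factor))  # keep saturation within [0, 1]
    r2, g2, b2 = colorsys.hls_to_rgb(h, l, s)
    return tuple(round(c * 255) for c in (r2, g2, b2))


# A tiny invented "image": a few pixels from a hypothetical advertisement.
pixels = [(200, 30, 30), (30, 120, 200), (240, 240, 240)]

desaturated = [scale_saturation(p, 0.0) for p in pixels]
unchanged = [scale_saturation(p, 1.0) for p in pixels]

print(desaturated)
print(unchanged)
```

Comparing the desaturated and original versions of an ad is one way to ask how much of its meaning depends on color. For whole image files, an image-editing program or imaging library would be the more practical route.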

I find both aspects of O’Halloran’s article important for my work: the need for frameworks to interpret intersemiosis and the use of digital technology to interpret and annotate multimodal texts. I would most likely test multimodal annotation software myself before using it in a class, but its use raises several questions. The most important is how to address the time and resources required to use it in the classroom. Another is what educational value digital technology adds to the analysis of multimodal texts. At this time, I am unsure of the answers. However, using annotation software in the classroom definitely reinforces a design-based pedagogy and gives students experience with digital tools.

Works Cited

O’Halloran, Kay L. “Systemic Functional-Multimodal Discourse Analysis (SF-MDA): Constructing Ideational Meaning Using Language and Visual Imagery.” Visual Communication 7 (2008): 443-75. Web.